Spectator Cockroaches, Sand, and the Social Facilitation of Skeuomorphs.

It was an experiment on cyclists in 1898 that first showed us how we might live with robots. It's a fascinating result, so let me tell you about it.

So. I love bots. Give me a pseudo-human interface, a smattering of natural language, and a computery voice, and I'm all yours. This year I'm working on a project to discover just how useful they can be. With wearables and systems like Amazon Echo, we're about to have to deal with a lot of these things. It seems to me that it's not so much the technology as the user psychology that deserves the most attention, so we should ask what we already know about it.

When I discussed this with Dr Krotoski, my very local social psychologist, I was pointed to the seminal paper "The Dynamogenic Factors in Pacemaking and Competition" by Norman Triplett, The American Journal of Psychology, Vol. 9, No. 4 (Jul., 1898), pp. 507–533.

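This is basically the ur-text of social psychology. You can read the original paper for details of the experiment (Triplett noticed that cyclists rode faster when racing or paced than when riding alone against the clock), but the simplified conclusion of the research it inspired was this: if a person is being watched, they find easy things easier, and hard things harder. It turns out, from other experiments, that this holds for many species. For example, cockroaches run a simple course more quickly when other cockroaches are watching, but take longer to solve a complex maze (Zajonc, R. B. (1965). Social facilitation. Science, 149, 269–274; Zajonc, R. B., Heingartner, A., & Herman, E. M. (1969). Social enhancement and impairment of performance in the cockroach. Journal of Personality and Social Psychology, 13, 83–92).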

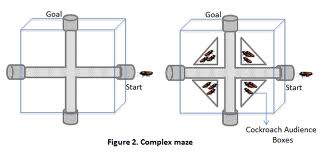

A maze for cockroaches, with spectator seating.

Further research, specifically "Social facilitation effects of virtual humans" (Park, S., & Catrambone, R., Human Factors, 2007 Dec; 49(6): 1054–1060), got to the nub of it: "virtual humans" produce the same social facilitation effect. In other words, a bot will make simple things simpler, and hard things harder, just by being there "watching".

This, it seems to me, is quite a big deal. If we're designing systems with even a hint of skeuomorphic similarity to a conscious thing (even if it just has a smiley face and a pretty voice), it might make sense for the system to ostentatiously absent itself when it detects the user doing something difficult. This might be the post-singularity reading of the Footprints in the Sand story, but nerd-rapture aside, it's an interesting question: when is it best for context-aware technology to decide to disappear? When the going is easy, or when the going gets tough?

Furthermore, I'm not sure we yet know the Uncanny Valley-like threshold of "humanness" that triggers the social facilitation effect. Do cameras have the same effect? What about the mere knowledge that someone is surveilling you? Either way, this has serious implications beyond AI design.

For example, the trend towards the quantified workplace, where managers can gather statistical data on their employees' activities, might be counterproductive. This is not simply because of the sheer awfulness of metrics, but because employees' knowledge that they are being watched might make their more complex tasks inherently more difficult, and hence less likely to be attempted in the first place.

For the most challenging tasks we face, the problems requiring the most cognitive effort and the most imaginative approaches, we may find that many of our current social addictions (surveillance, testing, and so on) are deeply harmful. "It looks like you're writing a letter," as Clippy would say. "Would you like me to make that subconsciously more difficult for you?"